From handling noisy data in multivariate learning to multilevel time series forecasting

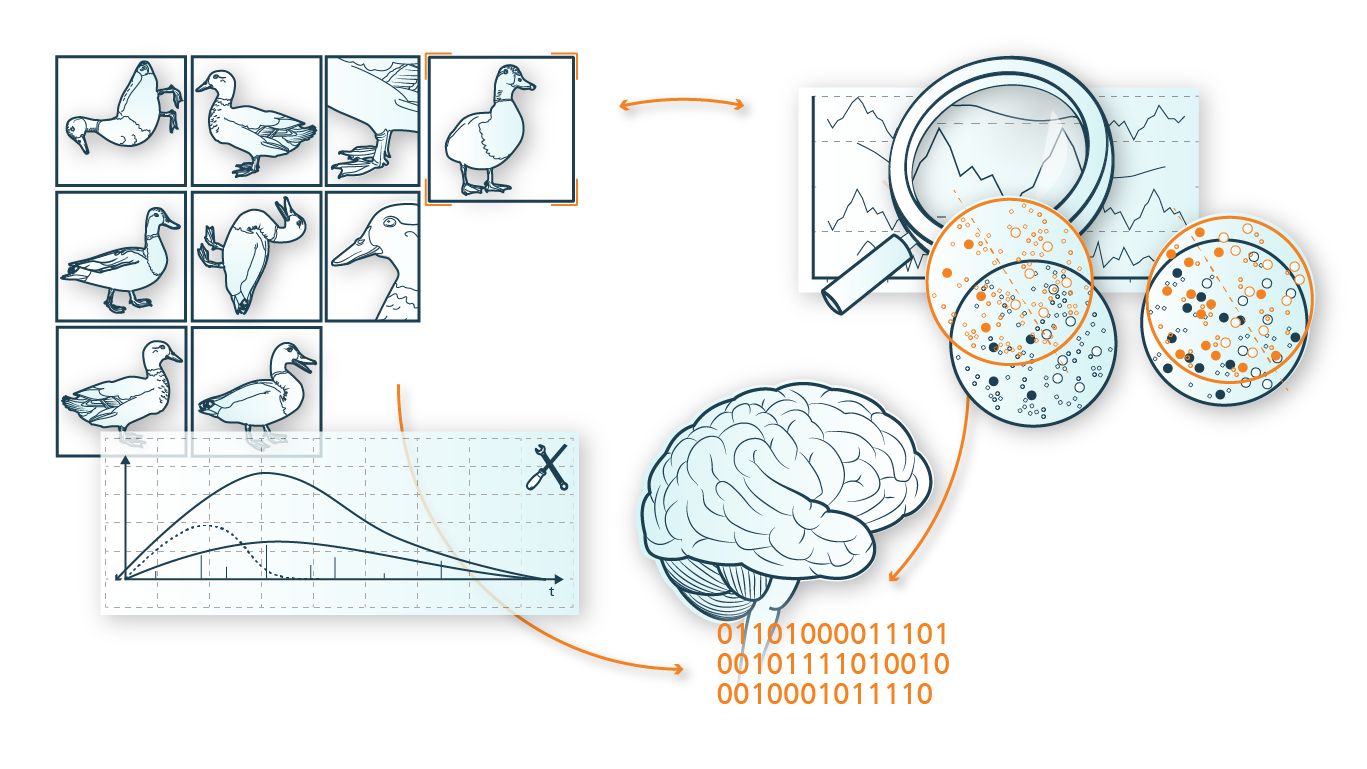

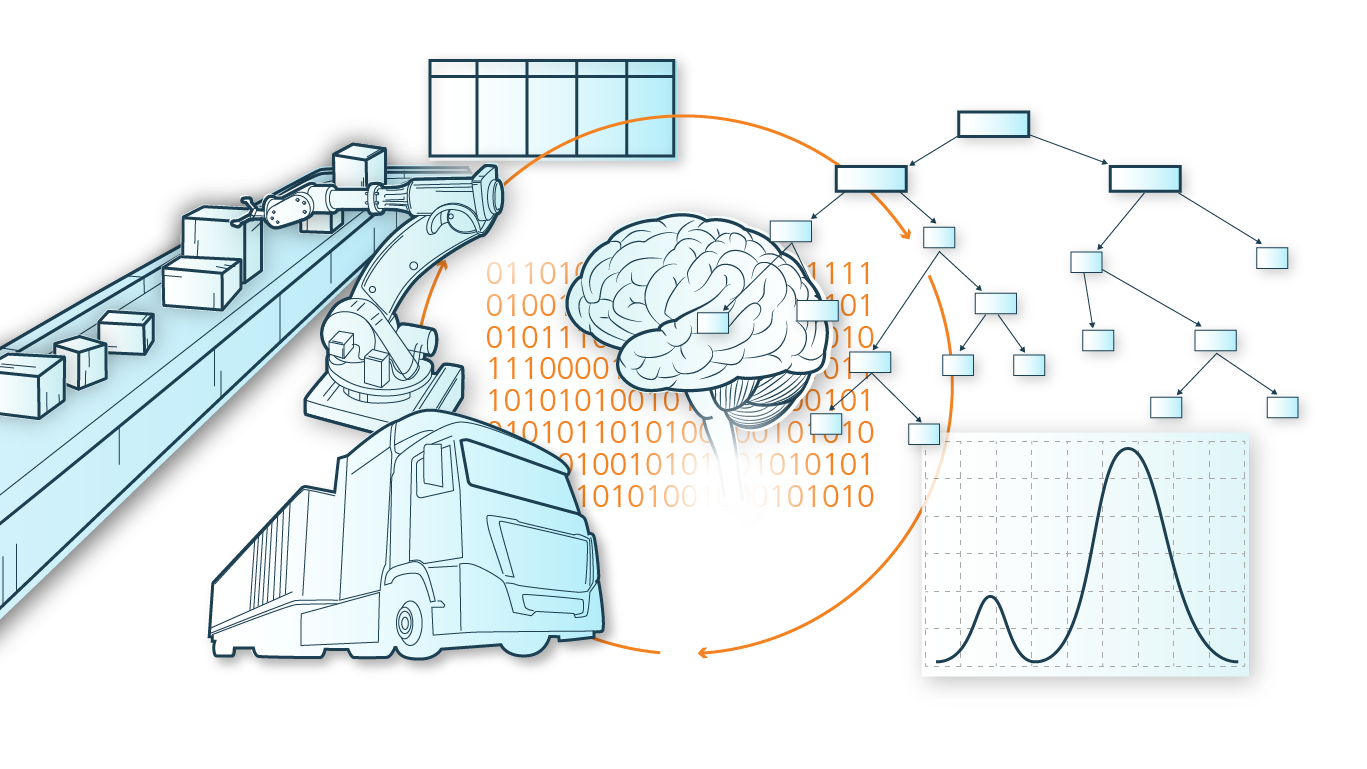

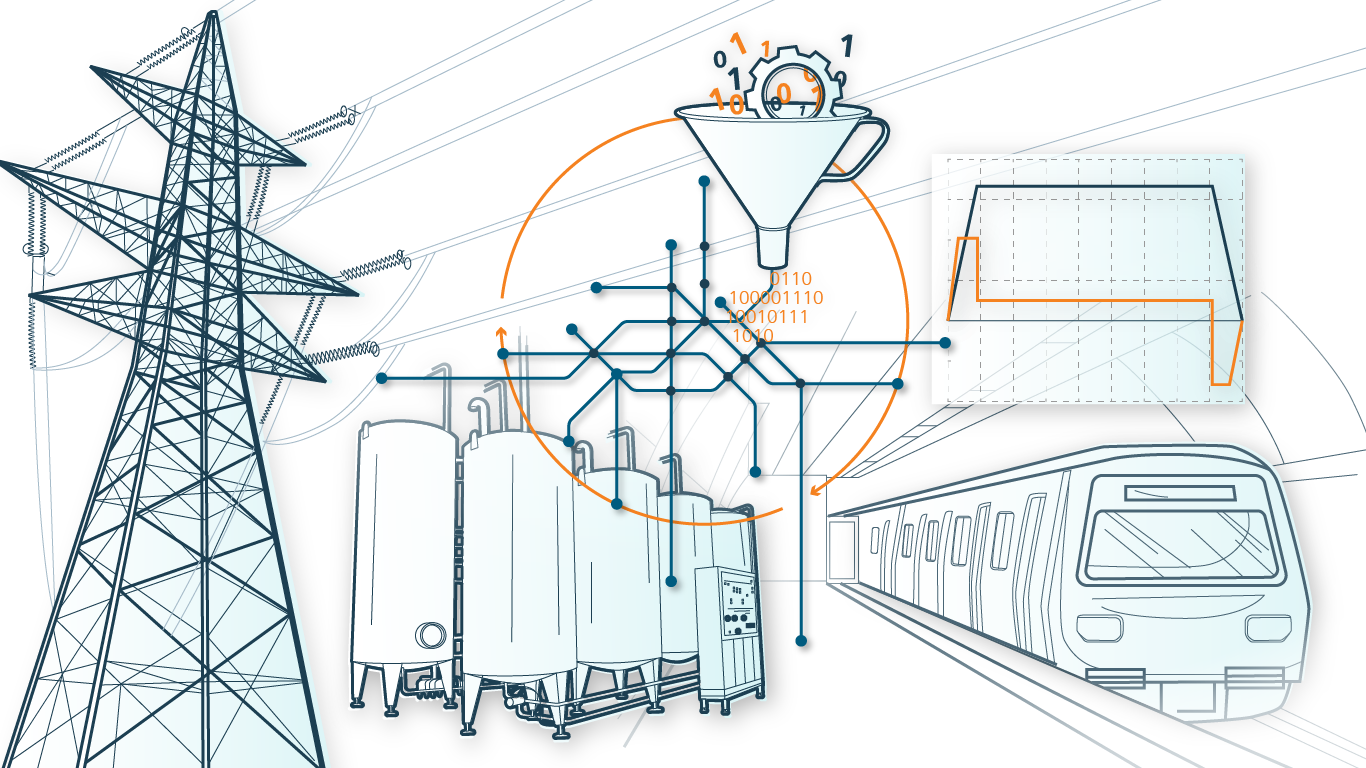

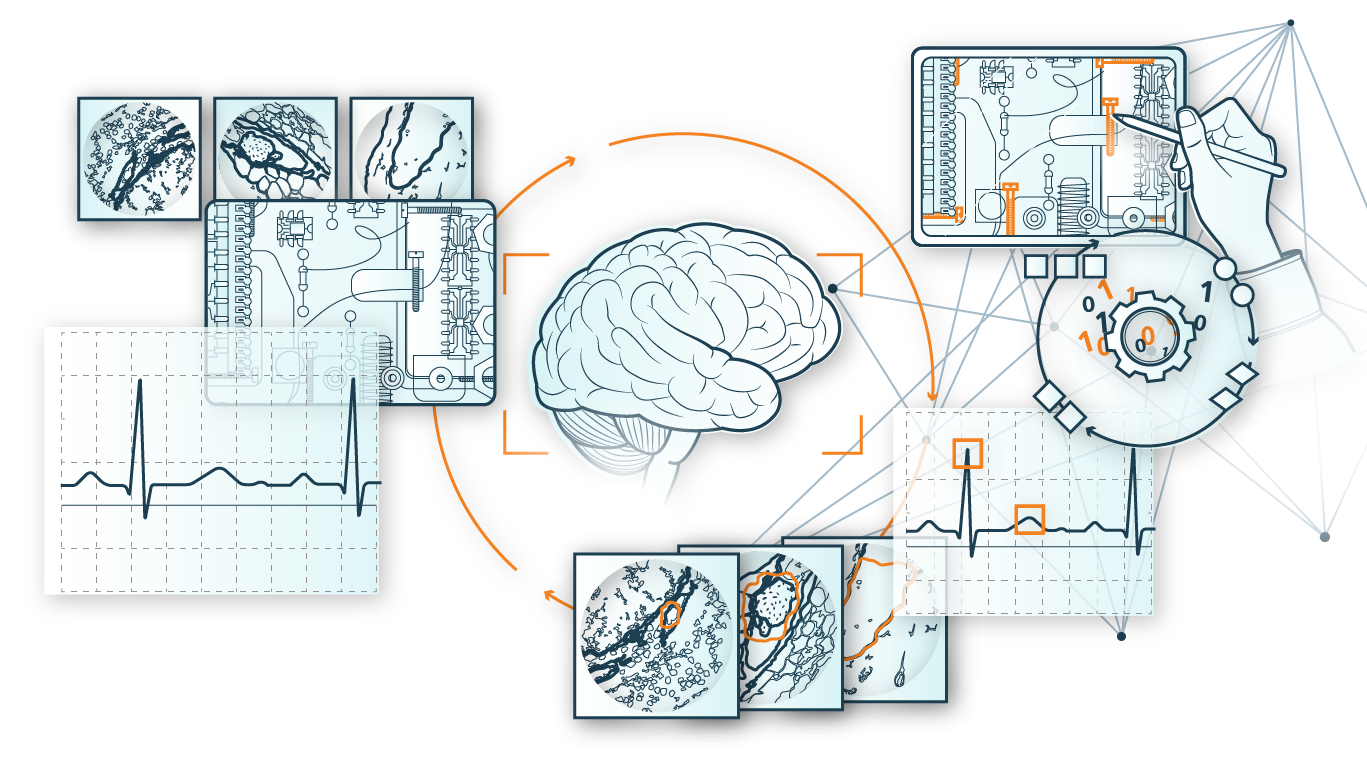

Research on time series analysis has gained momentum in recent years, because insights from time series can improve decision-making in industrial and scientific domains. Time series analysis aims to describe the patterns and trends that occur in data over time. Among its many applications, classification, regression, forecasting, and the detection of anomalous time points and events in sequences are particularly noteworthy, because they provide important information for business decisions, for example. In today's information-driven world, industry and research generate countless numerical time series every day. Many applications, including those in biology, medicine, finance, and industry, involve high-dimensional time series, and handling such large datasets raises several new and interesting challenges.

Challenges in natural processes

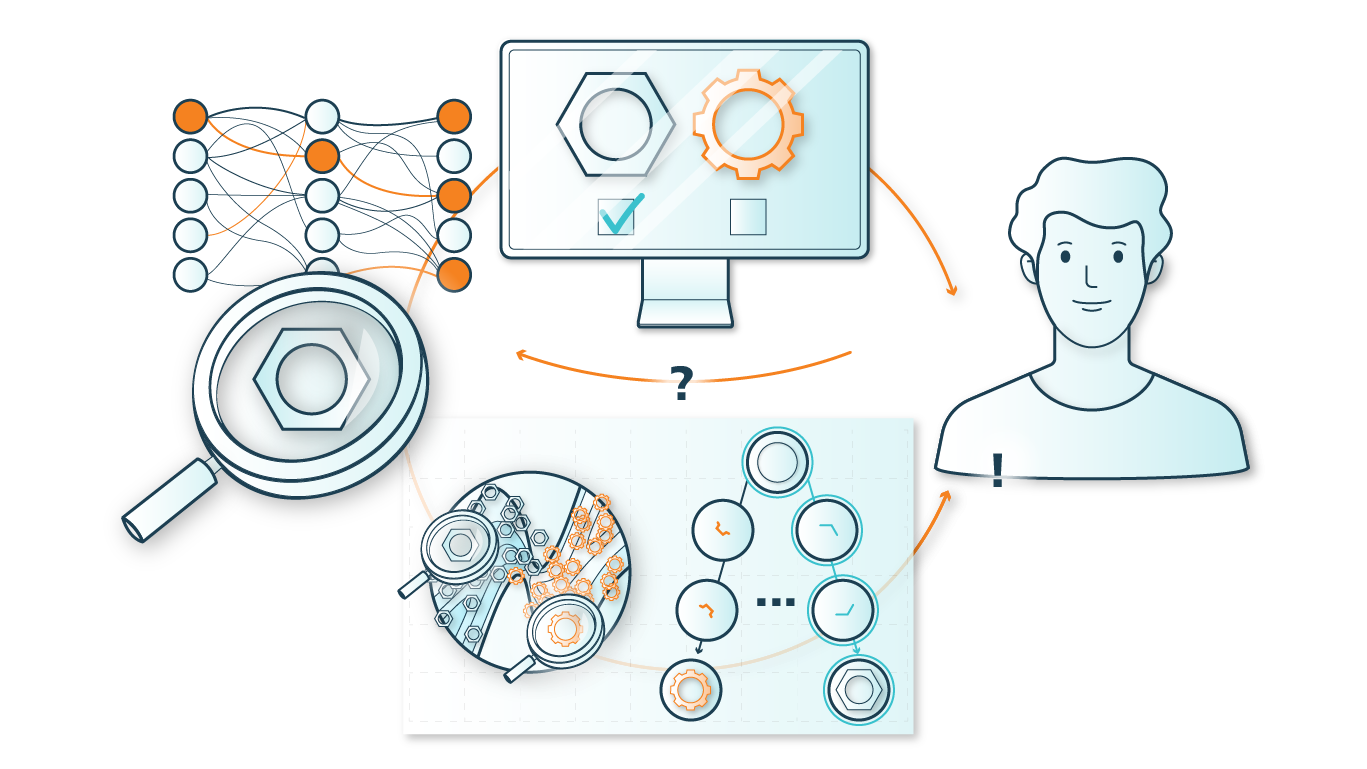

Despite significant developments in multivariate modeling, problems still arise with high-dimensional data because not all variables directly affect the target variable. As a result, predictions can become inaccurate when irrelevant variables are included in the model. This is often the case in practical applications such as signal processing. Natural processes, such as those in the applications mentioned below, generate data that are described by a multivariate stochastic process, which accounts for the relationships between the individual time series.
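To make the effect of irrelevant variables concrete, here is a minimal sketch under simplifying assumptions: synthetic, independent Gaussian features, a target that depends linearly on only two of them, and ordinary least squares as the model. This is not the method discussed in this article; the setup and all function names (`make_data`, `fit_ols`, `compare`) are hypothetical and chosen only for illustration. The sketch fits one model on the two relevant features and one on all features, then compares their error on held-out data.

```python
import random

# Hypothetical illustration: only features 0 and 1 drive the target,
# the remaining p - 2 features are pure noise (an assumption of this sketch).
def make_data(rng, n, p, noise_sd=1.0):
    X, y = [], []
    for _ in range(n):
        row = [rng.gauss(0.0, 1.0) for _ in range(p)]
        X.append(row)
        y.append(2.0 * row[0] - 1.0 * row[1] + rng.gauss(0.0, noise_sd))
    return X, y

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [A[i][:] + [b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def fit_ols(X, y, cols):
    """Least-squares fit with an intercept on the selected columns (normal equations)."""
    Z = [[1.0] + [row[c] for c in cols] for row in X]
    k = len(Z[0])
    ZtZ = [[sum(z[i] * z[j] for z in Z) for j in range(k)] for i in range(k)]
    Zty = [sum(z[i] * t for z, t in zip(Z, y)) for i in range(k)]
    return solve(ZtZ, Zty)

def mse(X, y, cols, beta):
    """Mean squared prediction error of a fitted model on (X, y)."""
    total = 0.0
    for row, t in zip(X, y):
        pred = beta[0] + sum(b * row[c] for b, c in zip(beta[1:], cols))
        total += (pred - t) ** 2
    return total / len(y)

def compare(seed=0, n_train=60, n_test=500, p=40):
    """Return (test MSE using relevant features, test MSE using all features)."""
    rng = random.Random(seed)
    X_tr, y_tr = make_data(rng, n_train, p)
    X_te, y_te = make_data(rng, n_test, p)
    relevant, everything = [0, 1], list(range(p))
    b_rel = fit_ols(X_tr, y_tr, relevant)
    b_all = fit_ols(X_tr, y_tr, everything)
    return mse(X_te, y_te, relevant, b_rel), mse(X_te, y_te, everything, b_all)

if __name__ == "__main__":
    rel, full = compare()
    print(f"test MSE, relevant features only: {rel:.3f}")
    print(f"test MSE, all features:           {full:.3f}")
```

With only 60 training samples and 40 candidate features, the model that uses every feature spends its degrees of freedom fitting noise, so its held-out error is typically much larger than that of the two-feature model. Real high-dimensional time series add temporal dependence on top of this, which the sketch deliberately ignores.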