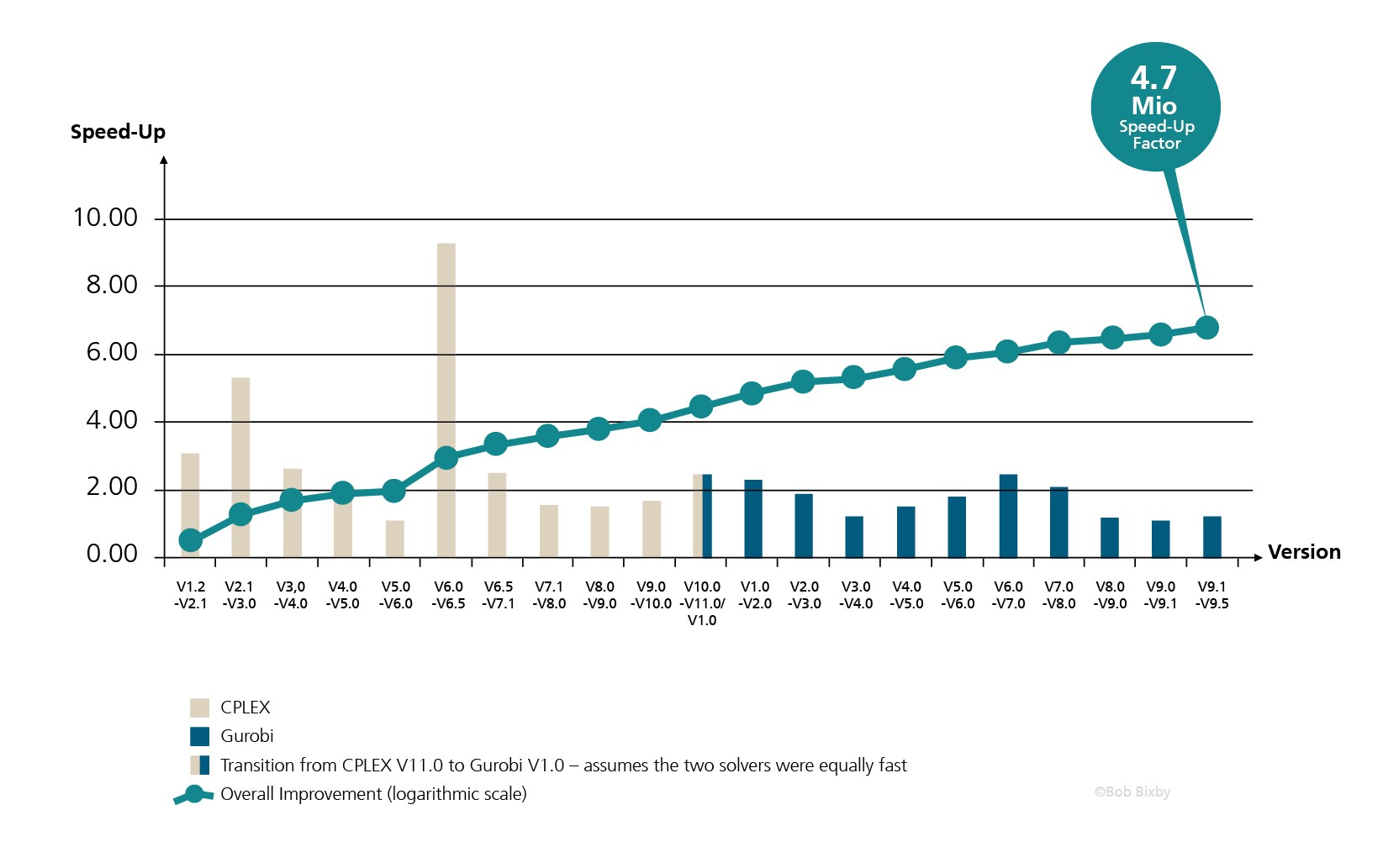

As the figure shows, better mathematical methods alone have delivered a speed-up factor of 4.7 million. In comparison, the speed-up factor due to better hardware is just 1,600. Taken together, this means that optimization problems that seemed unsolvable 25 years ago can now be solved within 1 second.
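To make the two factors tangible, the following minimal sketch multiplies them and converts the result into wall-clock time. It assumes that the algorithmic and hardware speed-ups are independent and therefore multiply, which the text implies but does not state; the function names and the length of a year used for the conversion are illustrative choices.

```python
# Speed-up factors quoted in the text.
ALGORITHM_SPEEDUP = 4.7e6  # better mathematical methods
HARDWARE_SPEEDUP = 1600.0  # better hardware

# Assumption: a year of 365.25 days, used only for the unit conversion.
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def combined_speedup(algorithm: float, hardware: float) -> float:
    """Assumption: the two factors are independent, so they multiply."""
    return algorithm * hardware


def former_runtime_years(runtime_today_s: float, speedup: float) -> float:
    """Runtime the same problem would have needed before the speed-up, in years."""
    return runtime_today_s * speedup / SECONDS_PER_YEAR


total = combined_speedup(ALGORITHM_SPEEDUP, HARDWARE_SPEEDUP)
years = former_runtime_years(1.0, total)
print(f"combined speed-up: {total:.3g}")
print(f"1 second today corresponds to about {years:.0f} years back then")
```

Under these assumptions the combined factor is about 7.5 billion, so a problem solved in one second today would have taken roughly 238 years of computation 25 years ago.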

From planning to real-time optimization

The partners in the ADA Lovelace Center have been developing efficient mathematical methods for planning problems of all kinds for many years. These include, for example, problems in transportation and logistics, power generation and transmission, supply chain management, building infrastructure, automotive, finance, and engineering applications. At the same time, questions in optimization theory are investigated, and the results are used, for example, to accelerate general-purpose solver codes.

Recently, the integration of machine learning with optimization methods has opened up a whole new field: the move from offline optimization problems (with known, static parameters) to online optimization problems in real-time applications (with dynamic, time-varying parameters). Given the often highly dynamic constraints of today's applications, e.g., logistics chain planning, the availability of such methods will be of particular importance. For example, we have shown that it is possible to derive priorities and cost functions in planning problems from observed past decisions. The ability to derive explicit planning rules from past decisions makes the planning decisions still to be made easier to automate and more objective. The further development of such approaches within the framework of learning methods is a particular focus at the ADA Lovelace Center. Our work makes it possible for the first time to turn the quality guarantees of these methods, which have so far existed only in theory, into practically usable algorithms that give the user a decisive competitive advantage.
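The idea of deriving priorities from observed past decisions can be illustrated with a small sketch. It is not the Center's method: it assumes a linear cost function over a few criteria, a finite list of candidate plans per decision, and a simple perceptron-style update that shifts weight whenever the current estimate would have picked a different plan than the planner did. All names and the toy history are hypothetical.

```python
from typing import List, Sequence, Tuple

Plan = Tuple[float, ...]              # criteria values of one candidate plan
Observation = Tuple[List[Plan], int]  # (candidate plans, index the planner chose)


def plan_cost(weights: Sequence[float], plan: Plan) -> float:
    """Weighted sum of the criteria (assumed linear cost model)."""
    return sum(w * x for w, x in zip(weights, plan))


def cheapest(weights: Sequence[float], plans: List[Plan]) -> int:
    """Index of the plan that the given weights would select."""
    return min(range(len(plans)), key=lambda i: plan_cost(weights, plans[i]))


def normalise(weights: Sequence[float]) -> List[float]:
    """Keep weights non-negative and summing to one to rule out the trivial zero cost."""
    clipped = [max(w, 0.0) for w in weights]
    total = sum(clipped)
    if total == 0.0:
        return [1.0 / len(clipped)] * len(clipped)
    return [w / total for w in clipped]


def learn_weights(observations: List[Observation], n_criteria: int,
                  step: float = 0.1, epochs: int = 20) -> List[float]:
    weights = [1.0 / n_criteria] * n_criteria
    for _ in range(epochs):
        for plans, chosen in observations:
            predicted = cheapest(weights, plans)
            if predicted == chosen:
                continue
            # Make the observed plan cheaper relative to the wrongly predicted one.
            weights = normalise([
                w - step * (obs - pred)
                for w, obs, pred in zip(weights, plans[chosen], plans[predicted])
            ])
    return weights


# Hypothetical history: each plan is (transport cost, delay).
history: List[Observation] = [
    ([(1.0, 4.0), (3.0, 1.0)], 0),
    ([(2.0, 2.0), (1.0, 5.0)], 1),
    ([(4.0, 0.0), (3.0, 3.0)], 1),
]
weights = learn_weights(history, n_criteria=2)
print([round(w, 3) for w in weights])
```

On this toy history the learned weights reproduce all three recorded choices and place more emphasis on the first criterion, which makes the planner's implicit priority explicit.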

Co-learning mathematical decision models by combining data analysis and optimization

Data analysis and optimization have traditionally been two separate, sequential steps in the development of decision support systems. However, this approach is increasingly reaching its limits for several reasons. In the age of ever larger data sets, simply selecting the parameters relevant to an optimization problem is a major challenge. It is also often unclear which characteristics of a system are relevant for making good planning decisions, or which effects influence the performance of the selected plan. This is where integrating approaches from data analysis and optimization gains importance. A very promising approach is to start with generic base models that describe an application and to learn both the evaluation function and the specific constraints from the available data. This form of automatic model building is much better suited to today's constantly changing planning conditions, where a continuous stream of new data often has to be incorporated immediately into the next control action in order to keep the system in its optimal state.

In particular, the ADA Lovelace Center develops techniques that are able to adapt to the dynamics of a given system and make optimal decisions for it. As a result, the decisions »learned« in this way will, over time and with more training data, increasingly reflect real-world, time-varying conditions. Exploiting this technique to derive optimization models paves the way towards self-learning mathematical decision models, which represent a major advance in the field of automatic planning and control. Methods previously available for this problem, such as multilevel stochastic optimization, often fail here because of their exorbitant computational cost. Online learning offers an alternative because its philosophy is to consider each data point only once, which decouples the computation along the temporal axis and thus significantly lowers computational complexity. It also ensures that recent knowledge is not crowded out by outdated information, because more distant observations are given significantly less weight than recently recorded data.
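The two properties of online learning described above, a single pass over the data and a lower weight for older observations, can be sketched with an exponentially weighted running estimate. This is a deliberately simple stand-in rather than the Center's algorithm; the class name, the forgetting factor of 0.9, and the data stream are assumptions made for illustration.

```python
class ForgettingMean:
    """Running estimate that sees each observation once and discounts old ones."""

    def __init__(self, forgetting: float = 0.9) -> None:
        if not 0.0 < forgetting < 1.0:
            raise ValueError("forgetting must lie strictly between 0 and 1")
        self.forgetting = forgetting
        self.weighted_sum = 0.0
        self.total_weight = 0.0

    def update(self, value: float) -> float:
        # Discount everything seen so far, then add the new point with weight 1.
        # An observation made k steps ago therefore carries weight forgetting**k.
        self.weighted_sum = self.forgetting * self.weighted_sum + value
        self.total_weight = self.forgetting * self.total_weight + 1.0
        return self.weighted_sum / self.total_weight


# Hypothetical stream: the underlying level jumps from 10 to 20 halfway through.
stream = [10.0] * 50 + [20.0] * 50
estimator = ForgettingMean(forgetting=0.9)
for value in stream:
    estimate = estimator.update(value)  # constant work and memory per data point

plain_mean = sum(stream) / len(stream)
print(f"forgetting estimate: {estimate:.2f}, plain mean: {plain_mean:.2f}")
```

After the jump, the discounted estimate ends close to the new level of 20, whereas the unweighted mean over the whole stream stays at 15. Each update touches only two numbers, so the cost per observation does not grow with the length of the history.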

Learning optimization methods

Learning optimization methods also make an essential contribution to a better understanding of a given system. In many areas of practical planning in a company, decisions are based on implicit, non-formalized rules (sometimes »gut decisions«). These are not bad in themselves, but they are often difficult to understand and thus difficult to automate. This form of expert knowledge is also often lost when the responsible employees change companies or retire. Learning optimization models enable a company to cast its implicit planning knowledge into a mathematical form that makes this knowledge understandable and usable for automated decision making. The ADA Lovelace Center is one of the pioneers in this field of research and provides significant impetus in a field that can decisively increase the competitiveness of German industry.

Another challenge for modern optimization methods is the reduction of model complexity. This is of immense importance, especially in view of the ever increasing amounts of data that have to be included in decision making. Exact integer optimization methods usually have a worst-case runtime that grows exponentially with the input size, and modern mathematical methods promise a remedy for this dilemma. Separating relevant from irrelevant parameters by integrating data analysis techniques and optimization algorithms allows signal to be distinguished from noise even in very large data sets. Restricting the objective and constraints of the chosen optimization model to such a representative selection of effects can significantly reduce computation time. An example is the recent development of learning algorithms for selecting representative scenarios in optimization under data uncertainty, a technique for making decisions that remain high quality and actionable when the input data are uncertain (e.g., because they are based on measurements or forecasts). Such algorithmic methods, which select representative scenarios from a large variety of conceivable ones, are an important contribution to the applicability of these methods in practice. Here, too, the ADA Lovelace Center is making internationally visible contributions with its research.
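What selecting representative scenarios means can be shown with a classical distance-based heuristic. The sketch below is a greedy forward selection that stands in for the learning algorithms mentioned above and is not the Center's method: it repeatedly adds the scenario that most reduces the total distance from all scenarios to their nearest representative, then gives each representative the share of scenarios it stands for. The function names and the demand values are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Scenario = Tuple[float, ...]


def total_distance(scenarios: List[Scenario], chosen: List[int]) -> float:
    """Sum over all scenarios of the distance to the nearest chosen scenario."""
    return sum(min(math.dist(s, scenarios[j]) for j in chosen) for s in scenarios)


def select_representatives(scenarios: List[Scenario], k: int) -> Dict[Scenario, float]:
    """Greedy forward selection; assumes the scenarios are pairwise distinct."""
    if not 1 <= k <= len(scenarios):
        raise ValueError("k must be between 1 and the number of scenarios")
    chosen: List[int] = []
    for _ in range(k):
        candidates = [i for i in range(len(scenarios)) if i not in chosen]
        best = min(candidates, key=lambda i: total_distance(scenarios, chosen + [i]))
        chosen.append(best)
    # Probability of a representative = share of scenarios closest to it.
    counts = {j: 0 for j in chosen}
    for s in scenarios:
        nearest = min(chosen, key=lambda j: math.dist(s, scenarios[j]))
        counts[nearest] += 1
    return {scenarios[j]: counts[j] / len(scenarios) for j in chosen}


# Hypothetical one-dimensional demand scenarios.
demand_scenarios: List[Scenario] = [
    (10.0,), (11.0,), (12.0,), (50.0,), (51.0,), (52.0,), (90.0,),
]
representatives = select_representatives(demand_scenarios, k=3)
for scenario, probability in representatives.items():
    print(scenario, round(probability, 3))
```

On this toy data the heuristic keeps one scenario from each of the three demand levels, so a downstream optimization model would work with three weighted scenarios instead of seven. The greedy search costs on the order of k times the square of the number of scenarios in distance evaluations, which is acceptable for a sketch but would need refinement for very large scenario sets.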