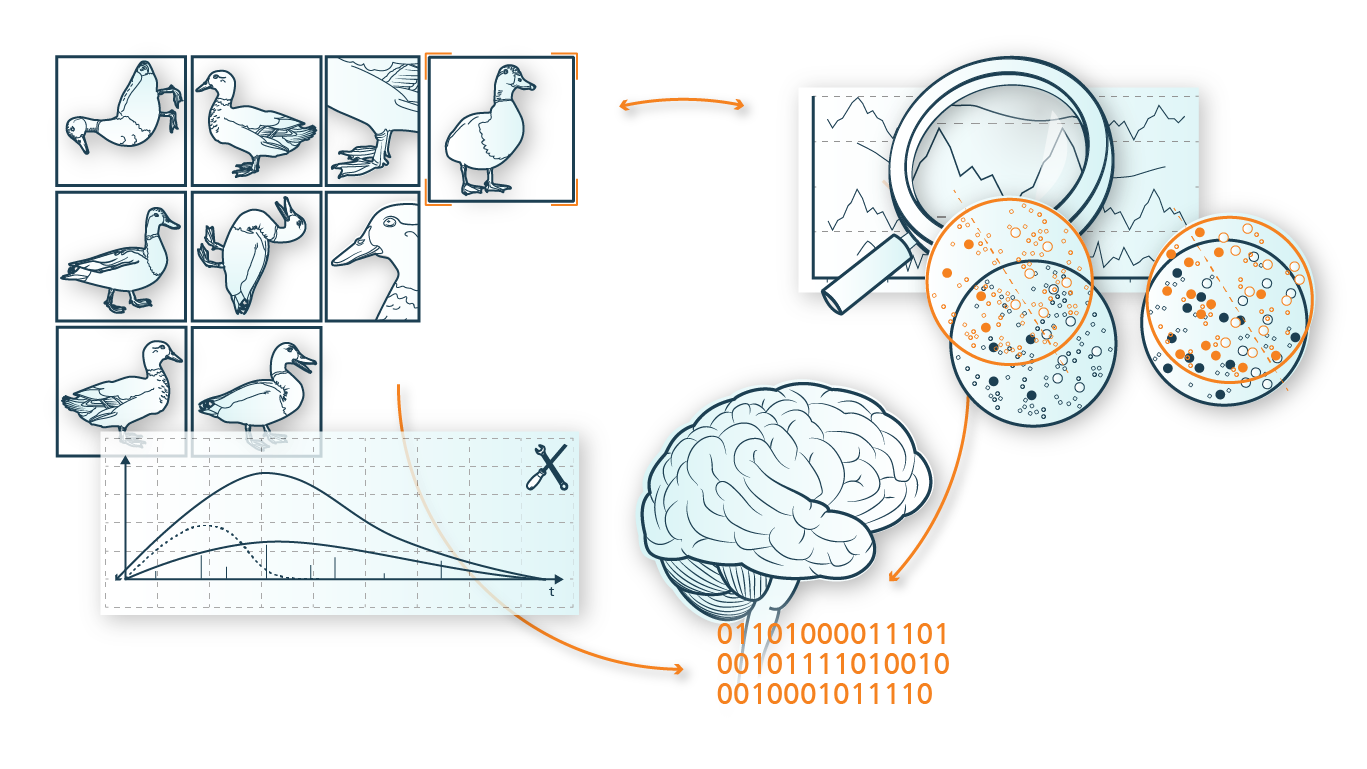

In the last few years, there were groundbreaking Artificial Intelligence (AI) applications in many research and day-to-day business fields. Apart from novel algorithmic development and increased computational resources, the main fuel behind these succusses was the availability of huge amounts of annotated data. Modern AI algorithms try to discover patterns within static data or construct models that can predict the »correct« output – as indicated by a »teacher« – for a set of given inputs.

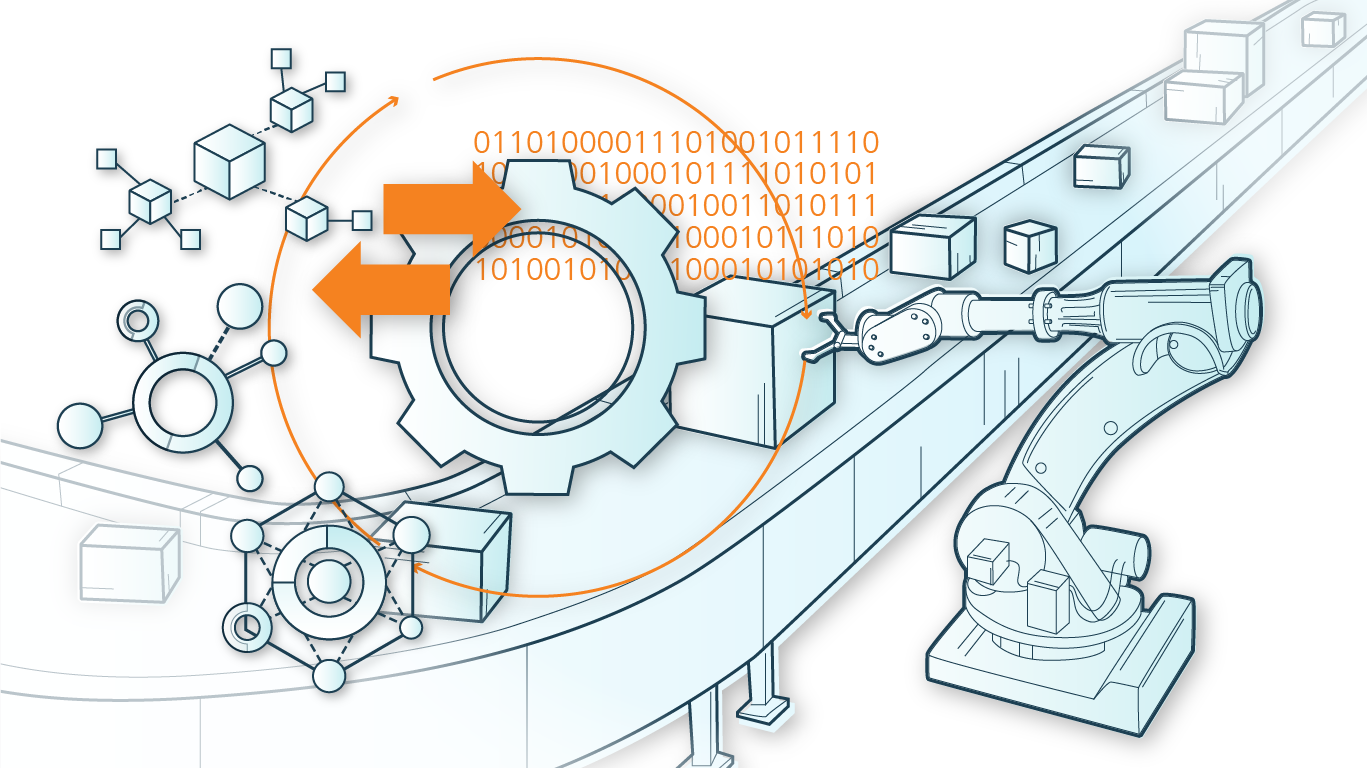

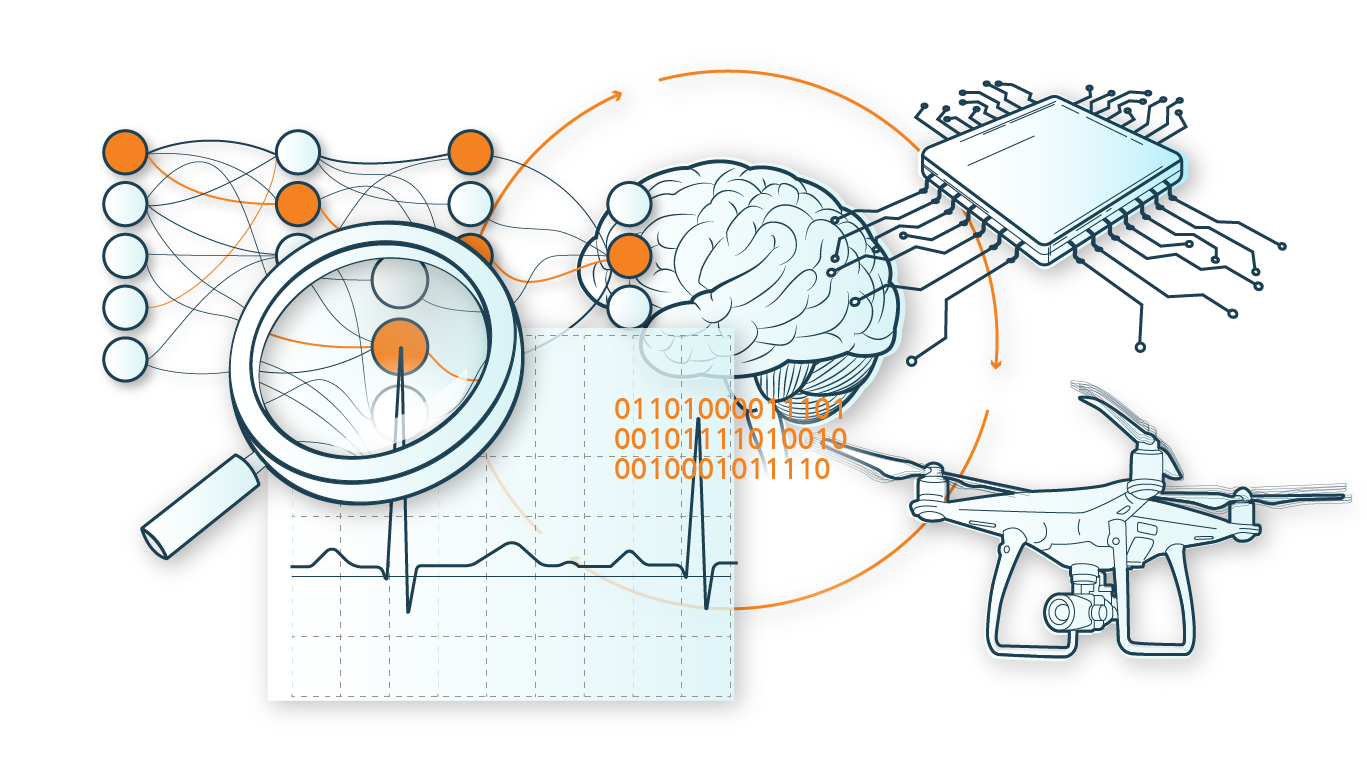

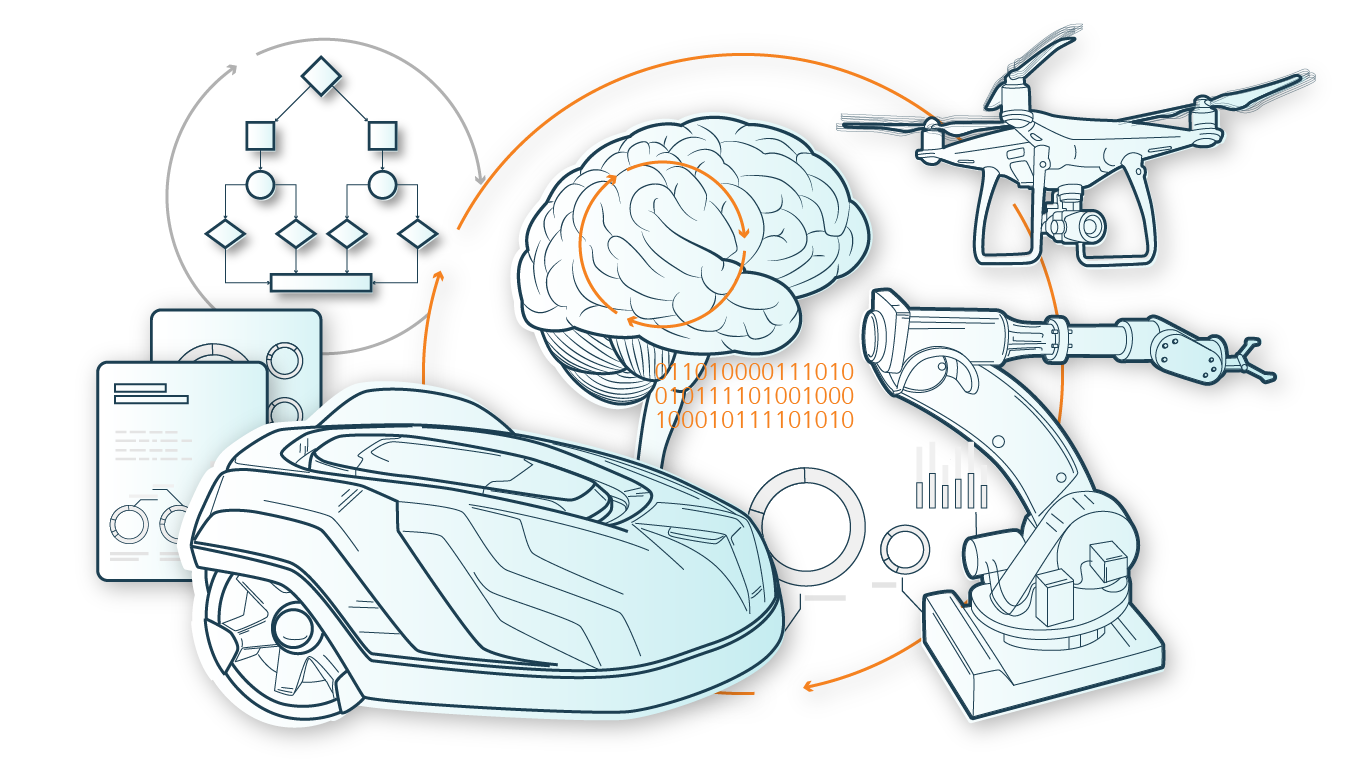

In order to take the next step in AI though, meaning to design truly autonomous systems that will be able to operate in the real world and interact with humans, such as autonomous vehicles, packet delivery drones or even intelligent production systems, we need algorithms with different properties, since the requirements are different: autonomous systems need to operate safely without supervision, making a series of decisions towards achieving a goal and being able to adapt to unforeseen situations due to the complexity of the world.

Experience-Based Learning is a central component towards designing such autonomous systems. Here, the behavior of the system is not pre-defined with a set of static rules or static machine learning models trained offline, but the system constantly improves its behavior by collecting new data from the environment.

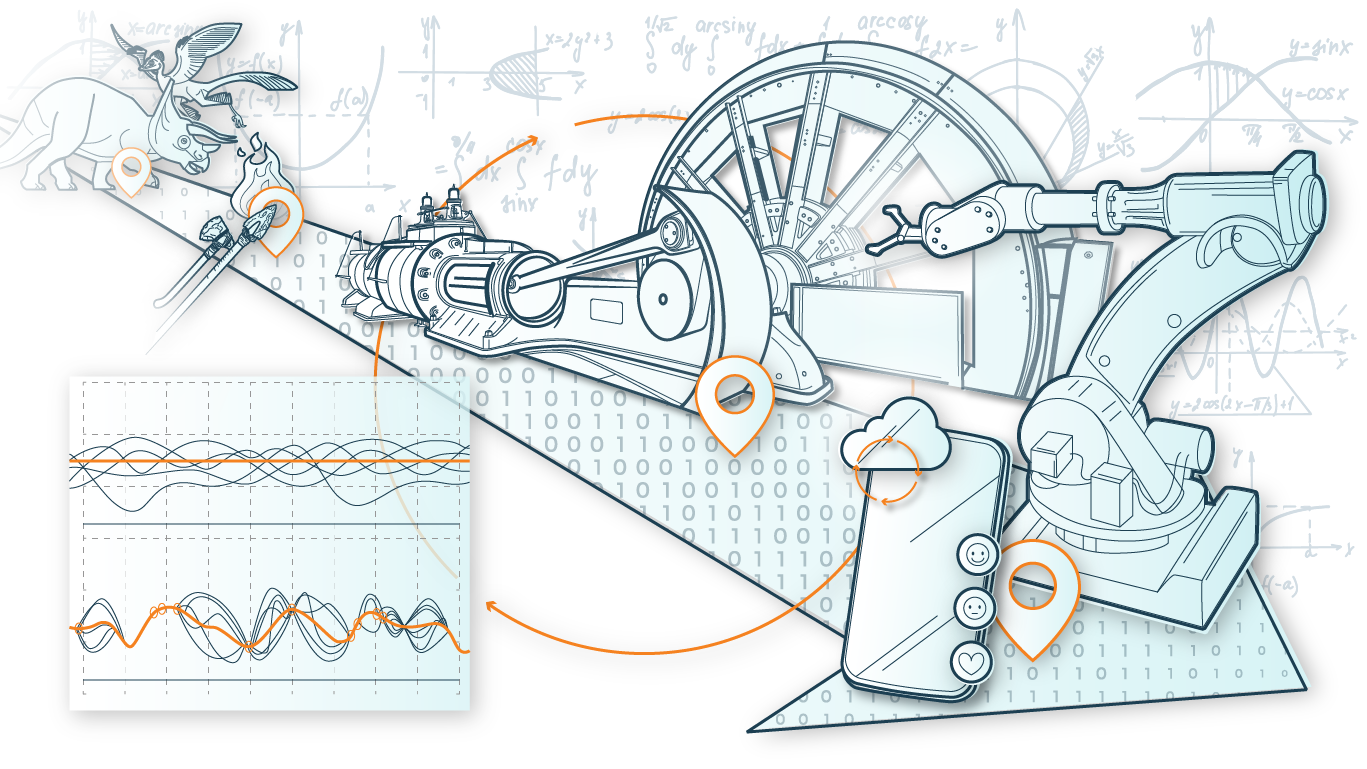

The field of Reinforcement Learning facilitates the training of agents that discover a strategy (also called a controller or policy) that improves their performance by interacting with the environment through a trial-and-error process, thus providing the theoretical foundation and a large ecosystem of algorithmic approaches for experience-based training of autonomous systems.

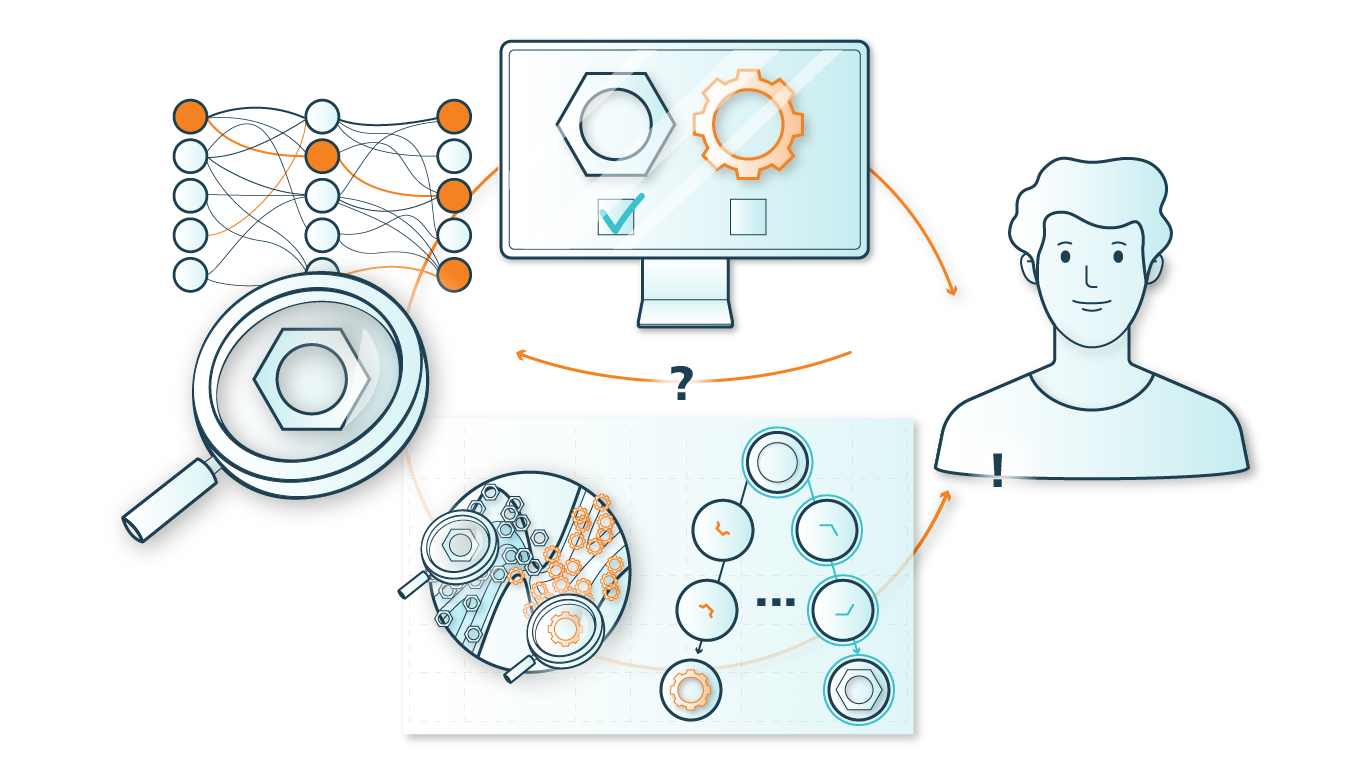

Our main drive in the »Experience-Based Learning« pillar is to support the transfer of ideas, algorithms and success stories of Reinforcement Learning Research to Industrial Applications of Autonomous Systems. To achieve this, we focus on approaches that lead to Dependable Reinforcement Learning.