We demonstrate that effective driving strategies for critical situations can be learned in simulation. This highlights the strength of reinforcement learning: millions of simulated driving hours enable the AI agent to master even difficult driving situations. We evaluate a wide range of highly varied scenarios. Driving behaviour is optimized for several factors, such as occupant safety, which is addressed by taking collision zones into account.

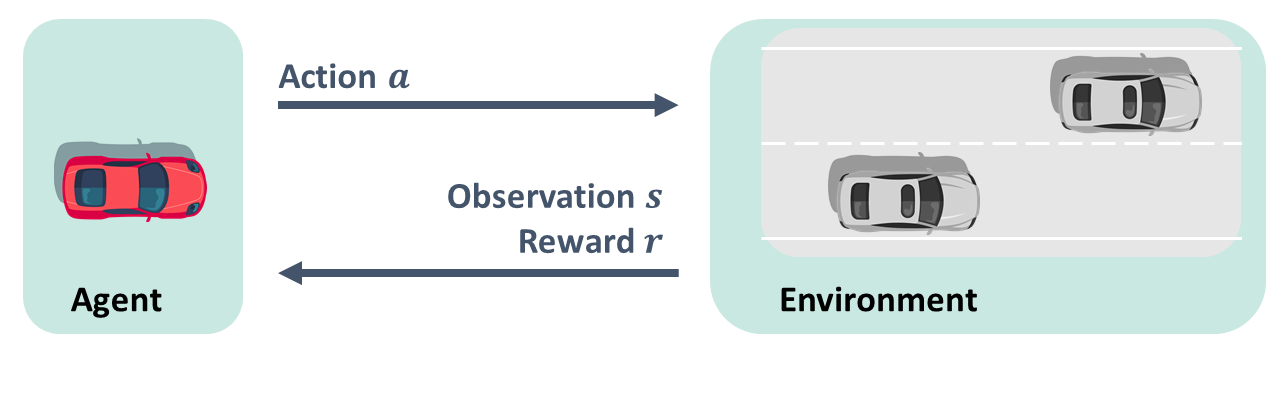

With SafeDQN, we have implemented a clear approach that learns from its own mistakes. Again, we see the architecture of a reinforcement learning agent for a constrained problem. The agent receives the state and the reward and must adhere to constraints. For each state, it must learn which action is best. Two neural networks are trained. One estimates the benefit of each action and optimizes driving with regard to reaching the goal quickly. The other estimates the risk of each action and learns on its own, from mistakes, which traffic situations are risky. Both networks are trained together over all possible actions, and a combination factor determined during training weighs benefit against risk to select the optimal action.
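The action selection described above can be sketched in a few lines. This is only an illustration under stated assumptions: the linear combination `benefit - risk_weight * risk`, the function name `select_action`, and the example values are hypothetical and not taken from the SafeDQN implementation, where the combination factor is determined during training.

```python
def select_action(q_benefit, q_risk, risk_weight):
    """Return the index of the action with the best risk-adjusted value.

    q_benefit and q_risk stand in for the outputs of the two networks for
    one state (one entry per action). The linear trade-off used here is an
    assumption made for illustration.
    """
    combined = [b - risk_weight * r for b, r in zip(q_benefit, q_risk)]
    return max(range(len(combined)), key=combined.__getitem__)


# Hypothetical network outputs for three actions:
# 0 = keep lane, 1 = change lane, 2 = accelerate.
q_benefit = [1.0, 2.0, 3.0]
q_risk = [0.1, 0.2, 2.0]

print(select_action(q_benefit, q_risk, risk_weight=0.0))  # risk ignored
print(select_action(q_benefit, q_risk, risk_weight=2.0))  # risk penalised
```

With a weight of zero the agent picks the action with the highest benefit; with a larger weight the risky action is penalised and a more cautious one wins.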

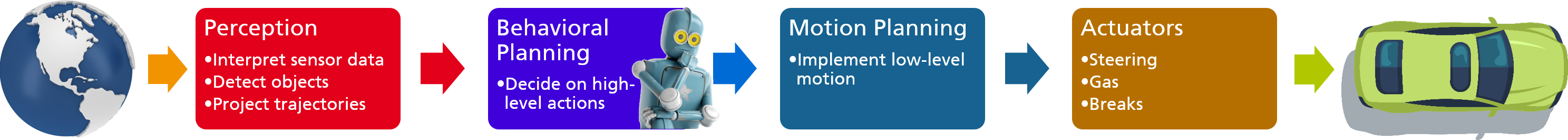

With the SafeVIPER approach, we have shown how to become even safer and more comprehensible for a specific situation. The task is to overtake several vehicles, for example on the highway. The car behind you may be faster than the one in front of you and may overtake you. The goal is to find the optimal time to change lanes. The SafeVIPER algorithm works in three steps. During training, a reinforcement learning agent with a neural network is trained. Safe training considers a constrained Markov decision process, which forces the agent to maintain a safe distance. During extraction, imitation learning is used to learn a decision tree from the trained neural network. Our safe extraction implements three extensions for safety. During verification, we take advantage of the fact that the decision tree can easily be converted into propositional logic. To do this, we also express the dynamics of the environment and the occurrence of an accident as logical formulas. If we then run a satisfiability solver on the combined formula "decision tree and environment lead to an accident" and it finds no satisfying assignment, we have proven that our agent is safe.
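The verification step can be illustrated with a toy example. The sketch below is not the SafeVIPER implementation: the Boolean variables, the tiny decision tree, and the accident rule are hypothetical, and exhaustive enumeration stands in for a real satisfiability solver, which is only feasible because there are two variables.

```python
from itertools import product

# Hypothetical Boolean state variables for a lane-change decision.
VARIABLES = ("gap_ahead_small", "left_lane_free")


def tree_policy(gap_ahead_small, left_lane_free):
    """Toy decision tree: returns True if the agent changes lanes."""
    if gap_ahead_small:
        if left_lane_free:
            return True   # overtake
        return False      # stay behind the slower car
    return False          # keep lane


def unsafe_policy(gap_ahead_small, left_lane_free):
    """Deliberately flawed tree: never checks the left lane."""
    return gap_ahead_small


def accident(left_lane_free, change_lane):
    """Toy environment rule: changing into an occupied lane is an accident."""
    return change_lane and not left_lane_free


def find_counterexample(policy):
    """Search for an assignment satisfying 'policy and environment lead to accident'.

    Returns the offending state, or None if the formula is unsatisfiable,
    which means the policy is safe under this toy model.
    """
    for values in product([False, True], repeat=len(VARIABLES)):
        state = dict(zip(VARIABLES, values))
        change_lane = policy(**state)
        if accident(state["left_lane_free"], change_lane):
            return state
    return None


print(find_counterexample(tree_policy))
print(find_counterexample(unsafe_policy))
```

For `tree_policy` no satisfying assignment exists, so the search returns `None`. For `unsafe_policy` it returns the state in which the gap is small and the left lane is occupied, which is the kind of counterexample a solver would report.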